Data Reliability in Machine Learning: Ensuring Trustworthy Model Outputs

April 2, 2024

In the rapidly evolving landscape of machine learning, organizations increasingly rely on data-driven insights to inform their decisions. However, the accuracy of these insights hinges on the quality and trustworthiness of the underlying data. As machine learning models become more pervasive across industries, data reliability has become a prerequisite for trustworthy model outputs.

The consequences of unreliable data in machine learning can be far-reaching, leading to flawed decisions, compromised outcomes, and potentially severe implications across various domains, such as finance, healthcare, and public policy. This comprehensive guide delves into the intricacies of data reliability in machine learning, exploring its challenges, strategies, and best practices to help organizations navigate this increasingly complex landscape.

The Role of Data in Machine Learning

At the heart of any machine learning model lies the training data. Models rely on vast amounts of data to learn patterns and relationships and to make predictions or decisions. The quality and reliability of that training data therefore directly affect the accuracy and trustworthiness of a model’s outputs.

Consider a healthcare scenario where a machine learning model is trained on patient data to predict the likelihood of developing a certain condition. If the training data contains inaccuracies, such as incorrect diagnoses or missing information, the model’s predictions could be flawed, potentially leading to misdiagnosis or inappropriate treatment recommendations.

In the finance sector, unreliable data in credit risk assessment models can lead to unfair lending decisions, potentially discriminating against certain groups and exposing institutions to regulatory scrutiny. Similarly, in the realm of autonomous vehicles, unreliable data used for training perception and decision-making models could have severe consequences, jeopardizing passenger safety and public trust.

What Is Data Reliability in Machine Learning?

Data Reliability

Data reliability in machine learning refers to the extent to which the data used for training and inference can be trusted to produce accurate and consistent results. It encompasses data quality, completeness, consistency, and timeliness, which together determine whether the data accurately represents the real-world phenomena it is intended to capture.

Key Components of Reliable Data

Reliable data in machine learning should possess the following key components:

1. Accuracy: The data should correctly represent the real-world entities or events it describes, free from errors or inaccuracies.

2. Completeness: The data should encompass all relevant attributes and instances required for the machine learning task, without missing or incomplete information.

3. Consistency: The data should be coherent and free from contradictions, adhering to established standards and formats.

4. Timeliness: The data should be up-to-date and reflect the current state of the phenomena it represents, ensuring that the machine learning models are trained on the most recent and relevant information.

Maintaining these key components is crucial for ensuring that machine learning models can learn accurate patterns and make reliable predictions or decisions.
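To make the four components concrete, here is a minimal sketch that scores a small batch of records on each one. The records, field names, plausible age range, agreed unit, and 90-day freshness window are all assumptions chosen for illustration; real checks would come from your own domain rules.

```python
from datetime import date

# Hypothetical patient records; field names and rules are illustrative only.
records = [
    {"id": 1, "age": 34, "visit": date(2024, 3, 20), "unit": "kg"},
    {"id": 2, "age": None, "visit": date(2024, 3, 28), "unit": "kg"},
    {"id": 3, "age": 212, "visit": date(2021, 1, 5), "unit": "lb"},
    {"id": 4, "age": 58, "visit": date(2024, 3, 30), "unit": "kg"},
]

def reliability_profile(rows, as_of, max_age_days=90):
    """Return the share of rows passing a simple check for each component."""
    n = len(rows)
    # Accuracy proxy: age is present and inside an assumed plausible range.
    accurate = sum(r["age"] is not None and 0 <= r["age"] <= 120 for r in rows)
    # Completeness: no field in the row is missing.
    complete = sum(all(v is not None for v in r.values()) for r in rows)
    # Consistency proxy: the row uses the agreed unit (assumed to be "kg").
    consistent = sum(r["unit"] == "kg" for r in rows)
    # Timeliness: the record is no older than the assumed freshness window.
    timely = sum((as_of - r["visit"]).days <= max_age_days for r in rows)
    return {
        "accuracy": accurate / n,
        "completeness": complete / n,
        "consistency": consistent / n,
        "timeliness": timely / n,
    }

profile = reliability_profile(records, as_of=date(2024, 4, 2))
print(profile)
```

Each score is a simple pass rate, which makes it easy to track over time. Note that a missing age counts against both accuracy and completeness here; whether to treat it that way is a design choice.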

Challenges to Data Reliability in Machine Learning

While the importance of data reliability is widely recognized, achieving it in practice can be challenging due to various factors:

Data Quality Issues

Common data quality issues, such as inaccuracies, errors, and missing or incomplete data, can severely undermine the reliability of machine learning models. These issues can arise from various sources, including human error, sensor malfunctions, or inconsistent data collection processes.

For instance, in the context of predictive maintenance for industrial machinery, sensor data containing errors or outliers could lead to inaccurate predictions of equipment failure, resulting in unnecessary maintenance costs or unplanned downtime. Similarly, in the retail sector, incomplete customer data or inaccurate product information can lead to flawed recommendations or inventory management decisions.

Addressing data quality issues requires a multifaceted approach, involving robust data validation techniques, data cleaning processes, and continuous monitoring. Organizations must establish clear data quality standards and implement automated checks and alerts to identify and resolve issues promptly.
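As one possible automated check for the sensor example above, the sketch below flags readings that fall outside the interquartile-range (IQR) fences. The readings and the conventional multiplier of 1.5 are assumptions; other outlier rules may suit your data better.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return (index, value) pairs lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [(i, v) for i, v in enumerate(values) if v < low or v > high]

# Hypothetical vibration readings from one sensor; 9.70 is an injected spike.
readings = [0.51, 0.49, 0.52, 0.50, 0.48, 9.70, 0.53, 0.50, 0.47, 0.51]
flagged = iqr_outliers(readings)
print(flagged)
```

A flagged reading is a candidate for review, not proof of an error: a genuine equipment fault can look exactly like a sensor glitch, so the follow-up should involve someone who knows the machinery.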

Bias and Fairness Concerns

Biases present in the training data can lead to unfair and discriminatory outcomes from machine learning models. These biases can stem from various sources, including historical biases, sampling biases, or subjective human judgments encoded in the data.

For example, if a resume screening model is trained on historical hiring data that exhibits gender or racial biases, it may perpetuate those biases in its recommendations, unfairly disadvantaging certain groups. Similarly, in the criminal justice system, biased data used for risk assessment models can lead to disproportionate sentencing or parole decisions.

Mitigating bias and ensuring fairness in machine learning requires a combination of technical solutions and organizational commitment. Techniques such as adversarial debiasing, reweighting training data, and constrained optimization can help reduce biases, while regular audits and fairness assessments are crucial for identifying and addressing potential issues.
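A fairness assessment often starts with something as simple as comparing outcome rates across groups. The sketch below computes per-group selection rates, their gap, and their ratio for a hypothetical resume-screening dataset. The group labels and decisions are invented for illustration.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Share of positive decisions (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical screening outcomes: 1 = advanced to interview.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
parity_gap = max(rates.values()) - min(rates.values())    # demographic parity difference
impact_ratio = min(rates.values()) / max(rates.values())  # disparate impact ratio
print(rates, parity_gap, round(impact_ratio, 3))
```

A ratio below 0.8 is a commonly cited warning sign (the "four-fifths rule"), but no single metric settles whether a dataset or model is fair. Treat numbers like these as a prompt for deeper review.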

Security and Privacy Risks

Machine learning often involves sensitive data, such as personal information or proprietary data. Ensuring the security and privacy of this data is crucial for maintaining data reliability and complying with relevant regulations, such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).

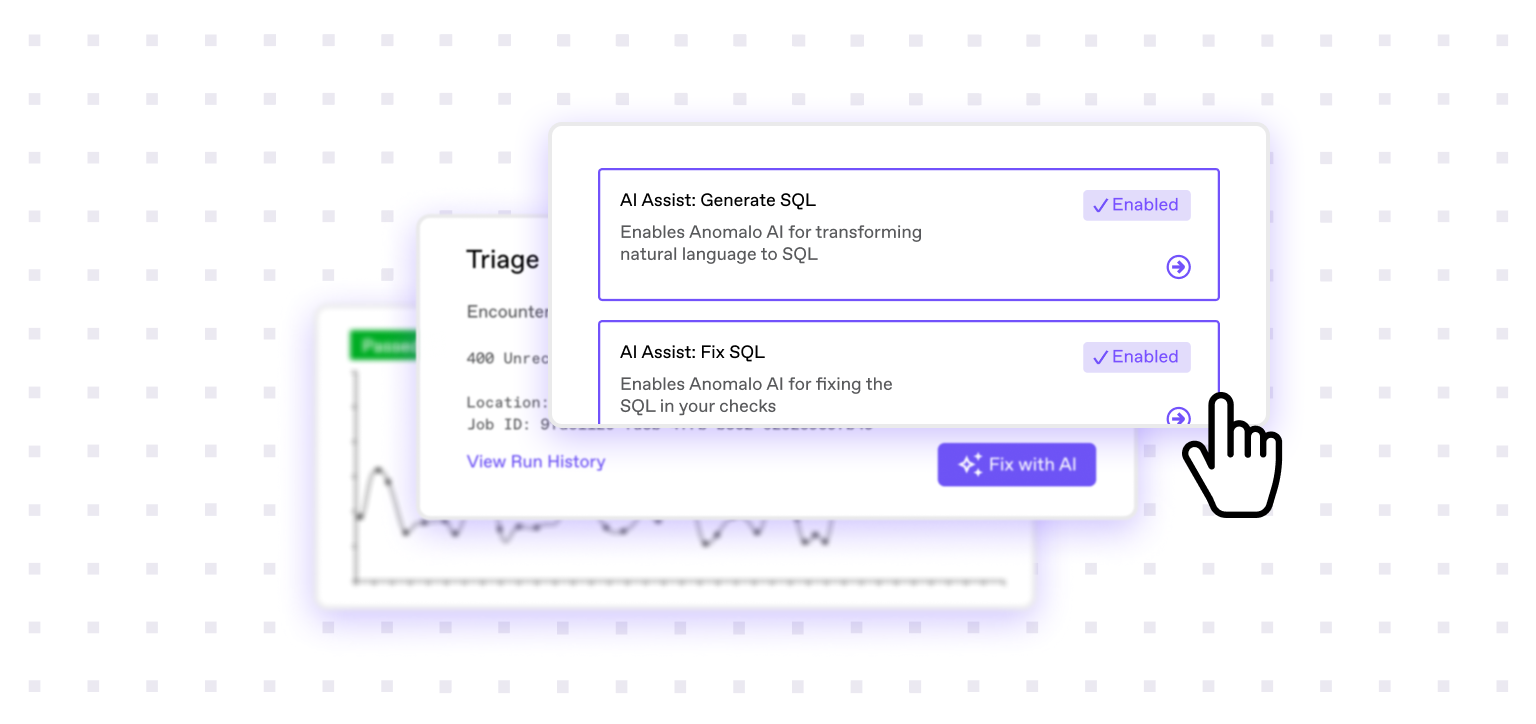

How Anomalo Can Mitigate These Challenges

Anomalo’s AI-based data quality monitoring uses unsupervised machine learning to detect anomalies in data. It also provides table observability, low-code validation rules, key metrics monitoring, and much more, to ensure that your organization’s data is trustworthy and reliable for machine learning use cases.

When Anomalo detects a change in your data that differs from historical patterns, it will alert you and provide a root-cause analysis so that your team can solve the issue at the source, before downstream models are affected.

Strategies for Ensuring Data Reliability

To address the challenges of data reliability in machine learning, organizations should adopt a comprehensive approach that encompasses the following strategies:

Data Quality Assurance

Implementing robust data quality assurance processes is essential for ensuring data reliability. This involves validating data through techniques such as constraint checking, outlier detection, and data profiling. Additionally, data cleaning and pre-processing steps, such as handling missing values, removing duplicates, and standardizing formats, can significantly improve data accuracy and quality.
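The cleaning steps named above can be sketched in a few lines. This example standardizes an email field, removes duplicates keyed on that field, and fills missing amounts with the median. The field names, the choice of email as the duplicate key, and median imputation are all assumptions for illustration.

```python
import statistics

def clean(rows):
    """Standardize formats, drop duplicates, and impute missing amounts."""
    seen, cleaned = set(), []
    for row in rows:
        email = row["email"].strip().lower()  # standardize the format
        if email in seen:                     # drop duplicates, first one wins
            continue
        seen.add(email)
        cleaned.append({"email": email, "amount": row["amount"]})
    # Impute missing amounts with the median of the known values.
    median = statistics.median(r["amount"] for r in cleaned if r["amount"] is not None)
    for r in cleaned:
        if r["amount"] is None:
            r["amount"] = median
    return cleaned

# Hypothetical customer rows with a duplicate and a missing value.
raw = [
    {"email": " Ana@Example.com ", "amount": 120.0},
    {"email": "ana@example.com", "amount": 120.0},
    {"email": "bo@example.com", "amount": None},
    {"email": "cy@example.com", "amount": 80.0},
]
tidy = clean(raw)
print(tidy)
```

Standardizing before deduplicating matters here: the two "Ana" rows only match once whitespace and case are normalized.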

Data quality assurance should be integrated throughout the machine learning lifecycle, from data ingestion to model deployment and monitoring. Automated data validation pipelines can continuously check for data quality issues, alerting data engineers and scientists to potential problems before they impact model performance.

Moreover, organizations should establish clear data quality metrics and thresholds, such as acceptable error rates or completeness levels, to ensure consistent and measurable data reliability standards. Regular data quality reports and audits can provide visibility into the state of data quality and enable timely remediation actions.
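One way to make such thresholds enforceable is to encode them as data and check each batch against them. In this sketch the metric names, the threshold values, and the sample rows are hypothetical; the point is the pattern of measuring, comparing, and reporting.

```python
def completeness(rows, field):
    """Share of rows where the field is present and not None."""
    return sum(r.get(field) is not None for r in rows) / len(rows)

def duplicate_rate(rows, key):
    """Share of rows whose key repeats an earlier row's key."""
    keys = [r[key] for r in rows]
    return 1 - len(set(keys)) / len(keys)

def breaches(metrics, thresholds):
    """Return a message for every metric that violates its threshold."""
    messages = []
    for name, (kind, limit) in thresholds.items():
        value = metrics[name]
        ok = value >= limit if kind == "min" else value <= limit
        if not ok:
            messages.append(f"{name}={value:.2f} violates {kind} {limit:.2f}")
    return messages

# Assumed thresholds: at least 95% complete, at most 1% duplicates.
THRESHOLDS = {"completeness": ("min", 0.95), "duplicate_rate": ("max", 0.01)}

# Hypothetical labeled rows with one missing label and one repeated id.
rows = [
    {"id": 1, "label": 1},
    {"id": 2, "label": None},
    {"id": 2, "label": 0},
    {"id": 3, "label": 1},
    {"id": 4, "label": 0},
]
metrics = {
    "completeness": completeness(rows, "label"),
    "duplicate_rate": duplicate_rate(rows, "id"),
}
report = breaches(metrics, THRESHOLDS)
print(report)
```

A non-empty report could block a training run or raise an alert, depending on how strict the pipeline needs to be.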

Bias Detection and Mitigation

To address bias and fairness concerns, organizations should conduct regular fairness assessments on their machine learning models and training data. Various algorithmic approaches, such as adversarial debiasing, reweighting, and constrained optimization, can be employed to mitigate biases present in the data or the model itself.
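To illustrate reweighting, the sketch below assigns each training example the weight P(group) x P(label) / P(group, label), a scheme known in the fairness literature as reweighing. With these weights, group membership and label become statistically independent in the weighted data. The groups and labels are invented, and this is a from-scratch illustration rather than the API of any particular toolkit.

```python
from collections import Counter

def reweighting_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    pairs = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in pairs if y == 1) / sum(w for _, w in pairs)

# Hypothetical historical hiring data: group "a" was favored.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighting_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(weights, rate_a, rate_b)
```

Before weighting, the positive rates are 0.75 and 0.25; after weighting, both groups sit at the same rate. The weights would then be passed to a learner that supports per-sample weights.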

Security Measures

Maintaining data reliability in machine learning also requires implementing robust security measures. Encryption, anonymization, access controls, and continuous monitoring can help protect sensitive data from unauthorized access, ensuring data integrity and compliance with relevant regulations.
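As a small example of protecting identifiers before data reaches a training pipeline, the sketch below replaces an email address with a keyed hash (HMAC-SHA256). The key shown is a placeholder and the record is hypothetical. Strictly speaking this is pseudonymization rather than full anonymization: anyone holding the key can re-link records, so the key must be protected like any other secret.

```python
import hashlib
import hmac

def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed, deterministic hash."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

# Placeholder only: in practice, load the key from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

record = {"email": "ana@example.com", "age": 34}  # hypothetical record
safe = {**record, "email": pseudonymize(record["email"], SECRET_KEY)}
print(safe)
```

Because the hash is deterministic for a given key, the same person maps to the same token across tables, which keeps joins working without exposing the raw identifier.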

Tools and Technologies for Data Reliability

To support these strategies, organizations can leverage a variety of tools and technologies specifically designed to enhance data reliability in machine learning:

Data Quality Tools

Data quality tools, such as Anomalo’s AI-based data quality monitoring platform, can automate the process of assessing and ensuring data quality. These tools can perform comprehensive data profiling, identify anomalies, and provide alerts, triage support, and data lineage context when data quality issues arise.

Anomalo’s platform leverages advanced machine learning techniques to learn consistent data patterns and detect deviations from expected behavior, enabling early identification of data quality issues. Furthermore, it provides interactive dashboards and reporting capabilities, allowing data teams to visualize and analyze data quality metrics, streamlining the process of root cause analysis and remediation.

By integrating these data quality tools into their machine learning pipelines, organizations can proactively monitor and ensure data reliability, reducing the risk of flawed model outputs and ensuring consistent, trustworthy decision-making.

Bias Detection and Mitigation Platforms

Several platforms and frameworks, such as IBM’s AI Fairness 360 and Google’s What-If Tool, are dedicated to detecting and mitigating bias in machine learning models. These tools can help organizations identify potential biases and apply debiasing techniques to ensure fair and equitable outcomes.

AI Fairness 360 is an open-source toolkit that provides a comprehensive suite of algorithms and metrics for detecting and mitigating biases in machine learning models. It supports various debiasing techniques, such as adversarial debiasing and reweighting, and offers tools for evaluating model fairness across different demographic groups.

Google’s What-If Tool is an interactive visual interface that allows users to explore the behavior of machine learning models, including potential biases and fairness concerns. It enables users to simulate changes to the input data or model parameters and observe the impact on model outputs, providing valuable insights into the fairness and robustness of the model.

Best Practices for Maintaining Data Reliability

In addition to leveraging the right tools and technologies, organizations should adopt best practices to foster a culture of data reliability:

1. Establish Data Governance Policies

Implementing clear data governance policies is crucial for maintaining data reliability throughout the machine learning lifecycle. These policies should define standards, roles, and responsibilities for data quality, security, and ethical data management practices.

Data governance policies should cover areas such as data collection and acquisition processes, data validation and cleaning procedures, access controls and data lineage tracking, and procedures for addressing data quality issues or biases. Additionally, these policies should align with relevant regulations and industry best practices, such as GDPR for personal data or HIPAA for healthcare data.

2. Implement Continuous Monitoring and Auditing

Data reliability is not a one-time endeavor; it requires continuous monitoring and auditing to ensure that data quality and fairness are maintained over time. Organizations should implement automated monitoring systems that continuously track data quality metrics, model performance, and potential biases.
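A very simple form of automated monitoring is to compare today's value of a data quality metric with its recent history. The sketch below flags a value that lies more than three standard deviations from the historical mean. The metric, the history, and the threshold are assumptions, and this basic z-score rule is only an illustration, not a description of how any commercial monitoring product works.

```python
import statistics

def is_anomalous(history, today, z_threshold=3.0):
    """Flag today's value if it is far from the historical mean."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # A perfectly flat history: any change at all is unusual.
        return today != mean
    return abs(today - mean) / stdev > z_threshold

# Hypothetical daily null rates for one column over the past week.
null_rate_history = [0.020, 0.022, 0.019, 0.021, 0.020, 0.018, 0.021]

normal_day = is_anomalous(null_rate_history, 0.020)
bad_day = is_anomalous(null_rate_history, 0.150)
print(normal_day, bad_day)
```

Rules like this miss seasonality and slow drift, which is one reason teams often move to learned baselines as their monitoring matures.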

Regular audits should be conducted to assess the effectiveness of data reliability measures and identify areas for improvement. These audits should involve stakeholders from various teams, including data engineers, data scientists, domain experts, and compliance officers, to ensure a comprehensive evaluation from multiple perspectives.

3. Foster Collaboration

Maintaining data reliability in machine learning requires collaboration between various stakeholders, including data scientists, data engineers, domain experts, and decision-makers. By fostering open communication and knowledge sharing, organizations can ensure that domain knowledge is effectively incorporated into the machine learning process, and potential data reliability issues are identified and addressed from multiple perspectives.

Cross-functional teams should be established to facilitate collaboration and knowledge transfer. For example, data scientists can work closely with domain experts to understand the nuances and complexities of the data, while data engineers can provide insights into data acquisition and processing pipelines, identifying potential sources of quality issues.

The Intersection of Data Reliability and Model Explainability

The Importance of Interpretable Models

As machine learning models become more complex and opaque, the need for interpretability and transparency has gained significant importance. Interpretable models not only foster trust in the model’s outputs but also enable stakeholders to understand the decision-making process, identify potential biases, and ensure compliance with relevant regulations.

How Data Reliability Influences Model Explainability

Data reliability plays a crucial role in enabling model explainability. When the training data is accurate, complete, and free from biases, the model’s decisions and predictions become more interpretable and easier to explain. Conversely, if the data is unreliable, the model’s reasoning and outputs may become obscured, hindering transparency and trust.

Ensuring Transparency in Model Outputs

To ensure transparency in machine learning model outputs, organizations should adopt techniques such as feature importance analysis, local interpretable model-agnostic explanations (LIME), and counterfactual explanations. These methods can help stakeholders understand the factors influencing the model’s decisions and identify potential biases or errors stemming from unreliable data.
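Feature importance analysis can be illustrated with permutation importance: shuffle one feature at a time and measure how much accuracy drops. In the sketch below, the "model" is a hypothetical stand-in rule that uses only the first feature, and the data is invented; with a real trained model, the same loop applies unchanged.

```python
import random

def accuracy(model, X, y):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical stand-in for a trained model: it looks only at feature 0.
def model(row):
    return int(row[0] > 0.5)

X = [[0.1, 7.0], [0.9, 3.0], [0.8, 5.0], [0.2, 1.0], [0.7, 9.0], [0.3, 2.0]]
y = [0, 1, 1, 0, 1, 0]

importances = permutation_importance(model, X, y)
print(importances)
```

Feature 1 scores zero because the model never reads it. If a feature you believed to be irrelevant, or known to be unreliable, shows high importance, that is a signal to inspect the underlying data.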

Future Trends in Data Reliability and Machine Learning

As machine learning continues to evolve, data reliability will remain a critical concern. Several trends are expected to shape the future of data reliability in this domain:

1. Automated Data Quality Assurance: Advances in automated data quality assurance techniques, leveraging machine learning itself, will enable more efficient and scalable monitoring and validation of data quality.

2. Ethical AI and Responsible Data Practices: There will be an increased emphasis on ethical AI practices and responsible data management, with organizations prioritizing fairness, transparency, and accountability in their machine learning models.

3. Regulatory Landscape: The regulatory landscape surrounding data privacy, security, and responsible AI will continue to evolve, requiring organizations to adapt their data reliability strategies accordingly.

Conclusion

In the rapidly evolving landscape of machine learning, data reliability is a fundamental pillar that underpins the trustworthiness of model outputs. Organizations must adopt a proactive approach to data quality, addressing challenges such as poor-quality data, bias and fairness concerns, and security and privacy risks.

By implementing robust data quality assurance processes, bias detection and mitigation strategies, and security measures, organizations can enhance the reliability of their machine learning models. Leveraging specialized tools and technologies, establishing data governance policies, fostering collaboration, and embracing best practices are essential steps in this journey.

As machine learning continues to shape decision-making across industries, prioritizing data reliability will not only ensure accurate and fair outcomes but also foster trust and confidence in the adoption of these powerful technologies. By addressing data reliability challenges head-on, organizations can unlock the full potential of machine learning while upholding ethical and responsible practices.
